This is the review of the Container Security book.

(My) Conclusion

I have mixed feelings about this book; on a scale of 1 to 10 I would give it a 7.

What I appreciated about it:

- A free (digital) copy of the book is available from Aqua: Aqua Container Security.

- All the Linux security mechanisms that containers use under the hood are very well explained, with multiple (valuable) examples: namespaces, cgroups, capabilities, system calls, AppArmor, seccomp. At the end of the day, container security is just a subset of Linux security.

- No hidden (or overt) publicity for any commercial tools, despite the fact that the author works for Aqua Security.

- A lot of references to resources available on the Internet; unfortunately, the author uses URL shortening, so good luck typing them into a browser if you have the paper version of the book.

- Clear and concise writing style.

What I think could have been done better:

- Even though the book is about the security of/in containers, there is no general introduction to the notion of a container or to the current container landscape.

- A lot of forward references in different chapters; usually in technical books you find backward references because (very often) the knowledge is built on top of the knowledge from previous chapters.

- There are a few chapters which are very thin, especially toward the end; the last chapter (chapter 14) for example is just 2 pages long.

- There is a companion website (https://containersecurity.tech/) but it contains just a single page.

1. Container Security Threats

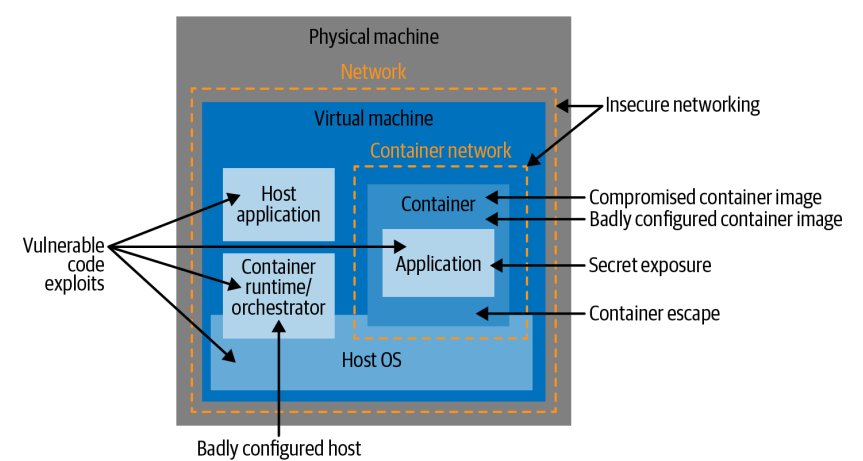

This chapter defines the different attack vectors for containers and the infrastructure that they run on. The attack vectors specifically linked to containers are:

- Application code vulnerabilities

- Badly configured images

- Badly configured containers

- Build Image attack

- Supply chain attack

- Vulnerable hosts

- Exposed secrets

- Insecure networking

- Container runtime vulnerabilities

Containers are very often deployed on cloud infrastructure using a multi-tenant model, which brings new threats and new attack vectors on top of the previous ones.

After presenting and explaining the problems that the use of containers brings, the author focuses on general (security) guidelines that should be followed when implementing mitigation controls:

- least privilege

  - each container should have the minimum set of permissions needed to fulfill its function.

- defense in depth

- reducing the attack surface

  - split the monolithic application into smaller, simpler microservices; the resulting less complex architecture reduces the attack surface.

- limiting the blast radius

  - if one container is compromised, controls should be in place so that the other software components are not affected.

- segregation of duties

  - permissions and credentials should be passed only into the containers that need them.

2. Linux System Calls, Permissions and Capabilities

This chapter presents the basics of Linux system calls, Linux file permissions (with an extensive explanation of the usage of setuid and setgid) and Linux capabilities. For each of these Linux features some examples are given, and the author emphasizes that these features are heavily used by containers and container runtimes because, at the end of the day, a container is just a Linux process running on a host.
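To make the capabilities part a bit more concrete, here is a minimal Python sketch (mine, not from the book) that decodes the `CapEff` bitmask found in `/proc/<pid>/status`. The table is only an illustrative subset of the capability numbers defined in `linux/capability.h`, and the function names are my own.

```python
from __future__ import annotations

# Illustrative subset of Linux capability bit numbers (linux/capability.h).
# A real tool would carry the complete table.
CAPABILITY_BITS = {
    0: "CAP_CHOWN",
    7: "CAP_SETUID",
    10: "CAP_NET_BIND_SERVICE",
    13: "CAP_NET_RAW",
    21: "CAP_SYS_ADMIN",
}


def decode_capabilities(hex_mask: str) -> list[str]:
    """Return the known capability names whose bit is set in a hex mask."""
    mask = int(hex_mask, 16)
    return [
        name
        for bit, name in sorted(CAPABILITY_BITS.items())
        if mask & (1 << bit)
    ]


def effective_capabilities(status_text: str) -> list[str]:
    """Find the CapEff line in /proc/<pid>/status content and decode it."""
    for line in status_text.splitlines():
        if line.startswith("CapEff:"):
            return decode_capabilities(line.split(":", 1)[1].strip())
    return []
```

On a Linux host, passing the content of `/proc/self/status` to `effective_capabilities` should list which of the capabilities in the table the current process holds.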

3. Control Groups

This chapter is very similar to the previous one in the sense that it does not speak about containers but about a Linux feature that is heavily used by containers. It is dedicated to Linux control groups (a.k.a. cgroups), whose goal is to limit the resources, such as memory, CPU and network input/output, that a process or a group of processes can use.

Container runtimes use cgroups behind the scenes to limit the resources used by containers, so cgroups provide protection against a class of attacks that attempt to disrupt running applications by consuming excessive resources, thereby starving legitimate applications.
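As an illustration of what such a limit looks like from the host, here is a small Python sketch (my own, assuming cgroup v2) that reads the `memory.max` file of a cgroup. The file contains either the literal `max` (no limit) or a number of bytes; the directory passed to `read_memory_limit` is an assumption, since the location of a container's cgroup depends on the runtime.

```python
from typing import Optional


def parse_memory_max(text: str) -> Optional[int]:
    """Parse the content of a cgroup v2 memory.max file.

    Returns None when no limit is set ("max"), else the limit in bytes.
    """
    value = text.strip()
    if value == "max":
        return None
    return int(value)


def read_memory_limit(cgroup_dir: str) -> Optional[int]:
    """Read the memory limit of the cgroup rooted at cgroup_dir.

    cgroup_dir is hypothetical here, e.g. a directory under /sys/fs/cgroup.
    """
    with open(f"{cgroup_dir}/memory.max", encoding="ascii") as handle:
        return parse_memory_max(handle.read())
```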

4. Container Isolation

This chapter covers another Linux feature that is a cornerstone of container security: Linux namespaces.

Linux namespaces are a feature of the Linux kernel that partitions kernel resources such that one set of processes sees one set of resources while another set of processes sees a different set of resources. If cgroups control the resources that a process can use, namespaces control what it can see.

For each of the existing namespaces (Unix Timesharing System, Process IDs, Mount Points, Network, User and Group IDs, Inter-Process Communications) the author shows how it can be created from the command line. For some namespaces, the isolation implemented by a container runtime is compared with the isolation that can be obtained using only the tools that Linux offers out of the box.
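A quick way to observe namespaces (a sketch of mine, not an example from the book) is to look at the symbolic links under `/proc/<pid>/ns`: each link target has the form `kind:[inode]`, and two processes are in the same namespace when the inode numbers match. The helper names below are my own.

```python
from __future__ import annotations

import os
import re

# Link targets under /proc/<pid>/ns look like "pid:[4026531836]".
NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a namespace link target into (kind, inode)."""
    match = NS_LINK.match(target)
    if match is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return match["kind"], int(match["inode"])


def namespaces_of(pid: str = "self") -> dict[str, int]:
    """Map each entry of /proc/<pid>/ns to its namespace inode (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for entry in os.listdir(ns_dir):
        _kind, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        result[entry] = inode
    return result


def shared_namespaces(first: dict[str, int], second: dict[str, int]) -> list[str]:
    """Return the namespace entries that two processes have in common."""
    return sorted(
        name for name in first.keys() & second.keys()
        if first[name] == second[name]
    )
```

Comparing `namespaces_of("self")` on the host with the result for the PID of a containerized process should show which namespaces the container runtime has separated.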

5. Virtual Machines

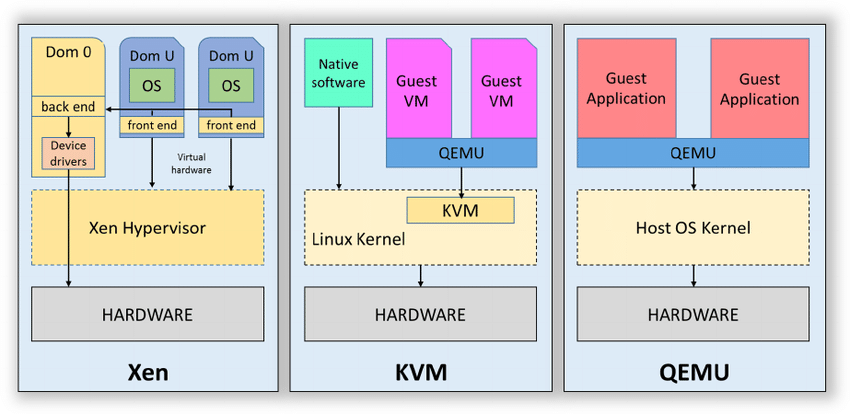

This chapter is an introduction to virtual machines. It explains the different types of hypervisors (a.k.a. VMM – Virtual Machine Monitor):

- Type1 – the hypervisor is installed directly on top of the hardware with no operating system underneath (ex: Hyper-V, Xen)

- Type2 – the hypervisor is installed on top of a host OS (ex: VirtualBox, Parallels, QEMU)

- Kernel-based Virtual Machines – this is a kind of hybrid type because it consists of a hypervisor running within the kernel of the host OS (ex: Linux KVM).

After describing the types of hypervisors, the author explains how hypervisors achieve virtualization via a mechanism called “trap and emulate”. When an OS is running as a virtual machine in a hypervisor, some of its instructions may conflict with the host operating system. So the hypervisor emulates the effect of that specific instruction or action without carrying it out. In this way, the host OS is not affected by the guest’s actions.

The chapter is concluded with the advantages of hypervisors for process isolation compared with the kernel processes (which are the cornerstone of containers) and the main drawbacks of hypervisors.

From the process isolation point of view, hypervisors offer greater isolation, the difference being that hypervisors have a simpler job to fulfill compared with OS kernels. In a kernel, user space processes are allowed some visibility of each other, but with hypervisors there is no sharing of memory or of processes.

On the drawback side, VMs have start-up times that are several orders of magnitude greater than those of a container, whereas containers give developers a convenient ability to “build once, run anywhere” quickly and efficiently. Each virtual machine also has the overhead of running a whole kernel, while containers share a kernel, so containers can be very efficient in both resource use and performance.

6. Container Images

This chapter focuses on images; it starts by explaining the OCI standards covering the image specification. In this chapter you will be able to see how different topics from previous chapters (namespaces, capabilities, control groups, root file system) fit together so that the end user can define, build and execute a container.

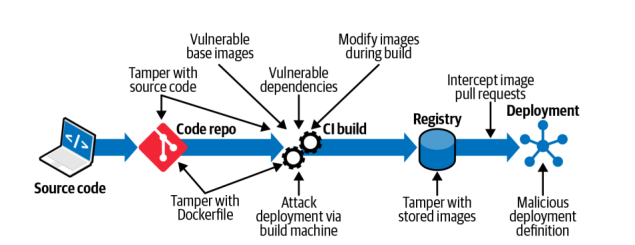

The second part of the chapter focuses on the different attack vectors on an image:

Some of these attack vectors are not really linked to container technology (tampering with source code, vulnerable dependencies, attacking the deployment via the build machine) but others are container-specific (tampering with the Dockerfile, usage of vulnerable base images, modifying images during the build).

7. Software Vulnerabilities in Images

The chapter is dedicated to vulnerability management in general and also in the context of containers. For the general part, the author explains the workflow that is followed when a vulnerability is discovered:

- A responsible security disclosure is agreed between the entity that found the vulnerability and the entity that “owns” the software. Both parties agree on a timeframe after which the researcher can publish their findings.

- The entity that “owns” the software fixes the vulnerability and delivers a patch.

- Once the vulnerability can be disclosed, it receives a unique identifier that begins with “CVE” (Common Vulnerabilities and Exposures), followed by the year and a unique ID.

Strangely enough, the author does not mention the CVSS (Common Vulnerability Scoring System) score of a vulnerability, which is usually used to judge its severity.

The second part of the chapter focuses on ways to handle vulnerability management in the context of containers. A few interesting and valuable ideas:

- (always) use immutable containers:

  - If containers are downloading code at runtime, different instances of the container could be running different versions of that code, but it would be difficult to know which instance is running what version.

  - It’s harder to control and ensure the provenance of the software running in each container if it could be downloaded at any time and from anywhere.

  - Building a container image and storing it in a registry is very simple to automate in a CI/CD pipeline.

- scan images regularly:

  - Regularly re-scanning container images allows the scanning tool to check the contents against its most up-to-date knowledge about vulnerabilities. A very common approach is to re-scan all deployed images every 24 hours, in addition to scanning new images as they are built, as part of an automated CI/CD pipeline.

- use a tool that can do more than scanning for vulnerabilities (if possible). A (non-exhaustive) list of extra things that the scanner could detect:

  - Known malware within the image

  - Executables with the setuid bit

  - Images configured to run as root

  - Secret credentials such as tokens or passwords

  - Sensitive data in the form of credit card or Social Security numbers or something similar
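One of the extra checks listed above, looking for executables with the setuid bit, is simple enough to sketch in a few lines of Python. This is only my illustration, assuming the image file system has already been unpacked into a local directory (the `root` argument is hypothetical); a real scanner does far more than this.

```python
from __future__ import annotations

import os
import stat


def is_setuid_executable(mode: int) -> bool:
    """True for a regular file with the setuid bit and an execute bit set."""
    return (
        stat.S_ISREG(mode)
        and bool(mode & stat.S_ISUID)
        and bool(mode & 0o111)
    )


def find_setuid_executables(root: str) -> list[str]:
    """Walk an unpacked image file system and list setuid executables."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # lstat so that symbolic links are not followed outside the tree.
            if is_setuid_executable(os.lstat(path).st_mode):
                found.append(path)
    return sorted(found)
```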

8. Strengthening Container Isolation

This chapter is an extension of Chapter 4 (Container Isolation); it presents other ways to strengthen container isolation using mechanisms and frameworks beyond the Linux kernel features presented so far.

The first part of the chapter presents mechanisms already present in the Linux ecosystem that can also be used in contexts other than containers, namely:

- Seccomp

  - Seccomp is a mechanism for restricting the set of system calls that an application is allowed to make.

  - The Docker default seccomp profile blocks more than 40 of the 300+ syscalls without ill effects on the vast majority of containerized applications. Unless you have a reason not to do so, it’s a good default profile to use.

- AppArmor

  - In AppArmor, a profile can be associated with an executable file, determining what that file is allowed to do in terms of capabilities and file access permissions.

  - AppArmor implements mandatory access controls. A mandatory access control is set by a central administrator, and once set, other users do not have any ability to modify the control or pass it on to another user.

  - There is a default Docker AppArmor profile.

- SELinux

  - SELinux lets you constrain what a process is allowed to do in terms of its interactions with files and other processes. Each process runs under an SELinux domain and every file has a type.

  - Every file on the machine has to be labeled with its SELinux information before you can enforce policies. These policies can dictate what access a process of a particular domain has to files of a particular type.
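To give an idea of what a seccomp profile looks like, here is a hedged Python sketch that generates a tiny allowlist profile in the JSON format that Docker accepts: everything is denied by default and only the listed syscalls are allowed. The syscall list is purely illustrative and far too short for a real application, and the function name is my own.

```python
from __future__ import annotations

import json


def build_allowlist_profile(allowed_syscalls: list[str]) -> dict:
    """Build a seccomp profile that denies every syscall not listed."""
    return {
        # Any syscall without a matching rule fails with an error.
        "defaultAction": "SCMP_ACT_ERRNO",
        "syscalls": [
            {
                "names": sorted(set(allowed_syscalls)),
                "action": "SCMP_ACT_ALLOW",
            }
        ],
    }


# Illustrative list only; real applications need many more syscalls.
profile = build_allowlist_profile(["read", "write", "exit_group", "read"])
profile_json = json.dumps(profile, indent=2)
```

Saved to a file, such a profile can be passed to Docker with `docker run --security-opt seccomp=<file>`.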

In the second part of the chapter the author presents container-specific technologies that can be used to reinforce container isolation:

- gVisor

  - gVisor provides a virtualized environment in order to sandbox containers. The system interfaces normally implemented by the host kernel are moved into a distinct, per-sandbox application kernel in order to minimize the risk of a container escape exploit.

  - To do this, a component of gVisor called the Sentry intercepts syscalls from the application. The Sentry is heavily sandboxed using seccomp, such that it is unable to access filesystem resources itself. When it needs to make system calls related to file access, it off-loads them to an entirely separate process called the Gofer. Even those system calls that are unrelated to filesystem access are not passed through to the host kernel directly but instead are reimplemented within the Sentry. Essentially it’s a guest kernel, operating in user space.

- Kata Containers

  - The idea with Kata Containers is to run containers within a separate virtual machine. This approach gives the ability to run applications from regular OCI format container images, with all the isolation of a virtual machine.

  - Kata uses a proxy between the container runtime and a separate target host where the application code runs. The runtime proxy creates a separate virtual machine using QEMU to run the container on its behalf.

- Firecracker

  - Firecracker is a virtual machine offering the benefits of secure isolation through a hypervisor and no shared kernel, but with startup times around 100ms.

  - Firecracker designers have stripped out functionality that is generally included in a kernel but that isn’t required in a container, like enumerating devices. The main saving comes from a minimal device model that strips out all but the essential devices.

9. Breaking Container Isolation

After explaining in previous chapters what can be done to enhance container isolation, this chapter focuses on how a container could be misconfigured so that this isolation is broken.

The following misconfigurations are explained:

Running containers as the default (root) user

Unless your container image specifies a non-root user or you specify a non-default user when you run a container, by default the container will run as root.

The best option is to define a custom user inside the container, but if this option is not available then a few other options are presented:

- override the user ID; this is possible in Docker using the --user flag of the docker run command.

- use user namespaces (covered in chapter 4) within the container, so that root inside the container is not the same as root on the host. You can enable the use of user namespaces in Docker, but it’s not turned on by default. If you’re interested in how to do it, please take a look at Isolate containers with a user namespace.

The use of the --privileged flag

Using the --privileged flag gives extended (Linux) capabilities to the process representing the running container. Docker introduced the --privileged flag to enable DinD (Docker in Docker), which can be used by build tools (very often in a CI/CD context) running as containers that need access to the Docker daemon in order to use Docker to build container images.

Mounting sensitive directories

Mounting inside the containers the root file system or specific host folders is not a very good idea. List of folders to avoid mounting:

- Mounting /etc would permit modifying the host’s /etc/passwd file from within the container.

- Mounting /bin, /usr/bin or /usr/sbin would allow the container to write executables into the host directory.

- Mounting host log directories into a container could enable an attacker to modify or erase the logs.

Mounting the Docker Socket

In a Docker environment, there is a Docker daemon process that essentially does all the work. When you run the docker command-line utility, this sends instructions to the daemon over the Docker socket that lives at /var/run/docker.sock . Any entity that can write to that socket can also send instructions to the Docker daemon. The daemon runs as root and will happily build and run any software of your choosing on your behalf.

Accessing the Docker Daemon via REST API with no authentication

This is not really mentioned in the book (even though I think that it should be) but it’s very similar to the previous paragraph. The Docker daemon can also be accessed via a REST API; if the daemon is configured to listen on a TCP socket without TLS, the API is accessible with no authentication.

Sharing namespaces between the container and the host

Containerized processes are all visible from the host; thus, sharing the host’s process namespace with a container lets that container see the other containerized processes.
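Most of the misconfigurations from this chapter can be spotted in the output of `docker inspect`. The following Python sketch is my own illustration, not something from the book: it takes one entry of that JSON output as a dictionary and reports the issues described above. The list of sensitive host paths is my assumption (in particular `/var/log` standing in for "host log directories").

```python
from __future__ import annotations

# Host paths considered sensitive for this sketch (an assumption of mine).
SENSITIVE_HOST_PATHS = (
    "/", "/etc", "/bin", "/usr/bin", "/usr/sbin",
    "/var/log", "/var/run/docker.sock",
)


def find_misconfigurations(inspect_entry: dict) -> list[str]:
    """Report risky settings found in one `docker inspect` entry."""
    config = inspect_entry.get("Config") or {}
    host_config = inspect_entry.get("HostConfig") or {}
    findings = []

    # An empty user means the container runs as root by default.
    user = (config.get("User") or "").split(":")[0]
    if user in ("", "0", "root"):
        findings.append("runs as root")

    if host_config.get("Privileged"):
        findings.append("privileged")

    # Binds entries have the form "host_path:container_path[:options]".
    for bind in host_config.get("Binds") or []:
        source = bind.split(":")[0].rstrip("/") or "/"
        if source in SENSITIVE_HOST_PATHS:
            findings.append(f"mounts sensitive host path {source}")

    if host_config.get("PidMode") == "host":
        findings.append("shares host PID namespace")

    return findings
```

Feeding it each element of `json.loads(...)` applied to the `docker inspect <container>` output should give a quick first overview, without replacing a proper audit.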

10. Container Network Security

The chapter starts with an introduction to the ISO/OSI networking model, and this model is used throughout the chapter to explain different topics related to network security. The author focuses on explaining the networking model for containers running under the Kubernetes orchestrator, but even if you’re not interested in K8s it is still possible to find some technology-agnostic best practices:

- Default Deny Ingress: define a network policy that denies ingress traffic by default and then add policies to permit traffic only where you expect it

- Default Deny Egress: same as for ingress, but applied to outgoing traffic.

- Restrict ports: restrict traffic so that it is accepted only on specific ports for each application.
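For Kubernetes, the "default deny" practices above translate into a NetworkPolicy with an empty pod selector and no allow rules. Here is a small Python sketch of mine that builds such a manifest as a dictionary (Kubernetes accepts manifests in JSON as well as YAML); the policy name and the namespace used in the example are my own choices.

```python
import json


def default_deny_policy(namespace: str, direction: str) -> dict:
    """Build a NetworkPolicy that denies all traffic in one direction."""
    if direction not in ("Ingress", "Egress"):
        raise ValueError("direction must be 'Ingress' or 'Egress'")
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {
            "name": f"default-deny-{direction.lower()}",
            "namespace": namespace,
        },
        "spec": {
            # An empty selector matches every pod in the namespace.
            "podSelector": {},
            # Listing the policy type without any rule denies that traffic.
            "policyTypes": [direction],
        },
    }


# "payments" is a hypothetical namespace used only for the example.
manifest_json = json.dumps(default_deny_policy("payments", "Ingress"), indent=2)
```

Further policies are then added on top of this one to permit only the traffic that is expected.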

11. Securely Connecting Components with TLS

Most of the chapter content has nothing to do with containers (this is highlighted even by the author herself); it covers the history of the SSL/TLS protocol and the basics of PKI: public/private keys, X.509 certificates, Certificate Signing Requests, certificate revocation and Certificate Authorities.

The only piece of information linked to containers that I found important is that, rather than writing your own code to set up secure connections, you can choose to use a service mesh to do it for you.

12. Passing Secrets to Containers

The chapter starts by enumerating properties that a secret must have:

- it should be stored in encrypted form so that it’s not accessible to every user or entity.

- it should never be written to disk unencrypted (even better, it should be held only in memory and never written to disk).

- it should be revocable (it can be made invalid in the event that the secret should no longer be trusted).

- it should be possible to rotate it.

- it should be independent of the lifecycle of the consumers.

- only software components that need the access to it should be able to read the secret

The next part enumerates different ways of injecting information (secrets included) into containers:

- store the information in the image

  - obviously this is not a very good idea for secrets, because they can be accessed by anyone who has the image and they cannot be changed unless the image is rebuilt.

- use environment variables as part of the configuration that goes along with the image

  - same problems as the hard-coded secrets

- pass the secret over the network

  - the running container will make the appropriate network calls to retrieve or receive the information.

  - in this case the data (secret) in transit should be encrypted, most probably using a service mesh

  - the principal drawback of this approach is how the container will be able to authenticate to the service offering the secret; the author does not offer any solution

- pass the secrets at runtime using environment variables.

  - environment variables defined for the container can be seen using different commands like docker inspect.

- pass the secrets through files.

  - This option consists of writing the secrets into files that the container can access through a mounted volume.

  - Combining this with a secure secrets store ensures that secrets are never stored “at rest” unencrypted.
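From the application side, the last option (secrets passed through files) could look like the following Python sketch. It is only my illustration: the default directory `/run/secrets` and the secret name `db_password` are assumptions, and the demo uses a temporary directory to stand in for the mounted volume.

```python
import tempfile
from pathlib import Path


def read_secret(name: str, secrets_dir: str = "/run/secrets") -> str:
    """Read a secret from a file in a mounted directory.

    The default directory is an assumption; use whatever path the
    volume is mounted at in your setup.
    """
    if "/" in name or name in ("", ".", ".."):
        raise ValueError(f"invalid secret name: {name!r}")
    content = (Path(secrets_dir) / name).read_text(encoding="utf-8")
    # Secret files often end with a newline that is not part of the value.
    return content.rstrip("\n")


# Demo: a temporary directory plays the role of the mounted volume.
with tempfile.TemporaryDirectory() as mounted_dir:
    (Path(mounted_dir) / "db_password").write_text("s3cr3t\n", encoding="utf-8")
    demo_value = read_secret("db_password", secrets_dir=mounted_dir)
```

Unlike an environment variable, the value read this way does not show up in the container configuration reported by `docker inspect`.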

I found this chapter rather strange because it explains how not to pass secrets to containers instead of presenting the good practices. Speaking of good practices, these are mentioned only very briefly, like the usage of a third-party (commercial) solution for secret storage. I would have preferred to have more insight into how these tools work.

13. Container Runtime Protection

This chapter covers the controls to put in place in order to ensure the protection of running containers.

The first idea is to compute a container profile. This profile should be computed prior to the deployment of the container in production and should contain the normal behavior of the container. Once this profile is known, a (container security) tool is able to compare the profile with the real behavior of the container at runtime and detect any discrepancy.

This container profile could contain the following information:

- network traffic – the other containers and or hosts that the container normally communicates with.

- executables – which commands the normally running container executes. In this case the author suggests using eBPF (which stands for extended Berkeley Packet Filter) technology.

- file access – what files from the container file system are usually accessed.

- user IDs – as a general rule, if the container is doing one job, it probably needs to operate under only one user identity.

- (Linux) capabilities – the (minimal) list of capabilities the container needs in order to execute properly; any attempt to use a capability not present in the list should raise a red flag.
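The comparison between a profile and the observed behavior boils down to set differences. Here is a minimal Python sketch of that idea (my own, with hypothetical names and only three of the dimensions listed above); how the observations are collected, for example with eBPF, is out of scope.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ContainerProfile:
    """Expected (or observed) behavior of a container."""
    executables: frozenset = frozenset()
    peers: frozenset = frozenset()          # hosts/containers it talks to
    capabilities: frozenset = frozenset()


def find_discrepancies(
    profile: ContainerProfile, observed: ContainerProfile
) -> dict[str, list[str]]:
    """Return everything observed at runtime that the profile lacks."""
    report = {}
    for attribute in ("executables", "peers", "capabilities"):
        unexpected = getattr(observed, attribute) - getattr(profile, attribute)
        if unexpected:
            report[attribute] = sorted(unexpected)
    return report
```

An empty report means the container stayed within its profile; anything else should raise a red flag.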

The second idea presented is drift prevention. It’s considered best practice to treat containers as immutable. The container is instantiated from its image, and then the contents of the container should not change. With drift prevention, the (container security) tool is able to distinguish between the software that came from the image and the software that is running in the workload, which gives it the ability to immediately stop any software that doesn’t belong to the (original) image.
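One simple way to picture drift detection (a sketch under my own assumptions, not how any particular tool implements it) is to compare the digests of the files found in the running container with the digests of the files shipped in the image: any file that is new or whose digest differs has drifted.

```python
from __future__ import annotations

import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex digest used to fingerprint a file's content."""
    return hashlib.sha256(data).hexdigest()


def find_drift(image_files: dict[str, str], running_files: dict[str, str]) -> list[str]:
    """List paths in the running container that are new or modified.

    Both arguments map a file path to the digest of its content.
    """
    return sorted(
        path
        for path, digest in running_files.items()
        if image_files.get(path) != digest
    )
```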

14. Containers and the OWASP Top 10

This sounds like a very interesting topic but unfortunately the author rushes through it. In some cases the author even admits that the type of risk is not linked to containers and applies to the non-container world as well.

Some of the OWASP Top 10 (2017) risks apply directly to the container world:

- Broken Authentication

  - This can be linked with the usage of secrets in the container world. These secrets need to be stored with care and passed into containers at runtime, as discussed in Chapter 12.

  - Containerized applications that must communicate with each other need to identify each other using certificates, and communicate using secure connections. This can be handled directly by the containers, or you can use a service mesh.

- Broken Access Control

  - Some container-specific approaches to mitigate the abuse of privileges that may be granted unnecessarily to users or components:

    - Don’t run containers as root.

    - Limit the capabilities granted to each container.

    - Use seccomp, AppArmor, or SELinux.

    - Use immutable containers.

- Insufficient Logging and Monitoring

  - The following container events should be logged:

    - Container start/stop events

    - Access to secrets

    - Any modification of privileges

    - Modification of container payload

    - Inbound and outbound network connections

    - Volume mounts

    - Failed actions such as attempts to open network connections, write to files, or change user permissions.


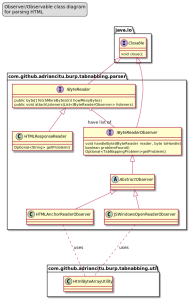

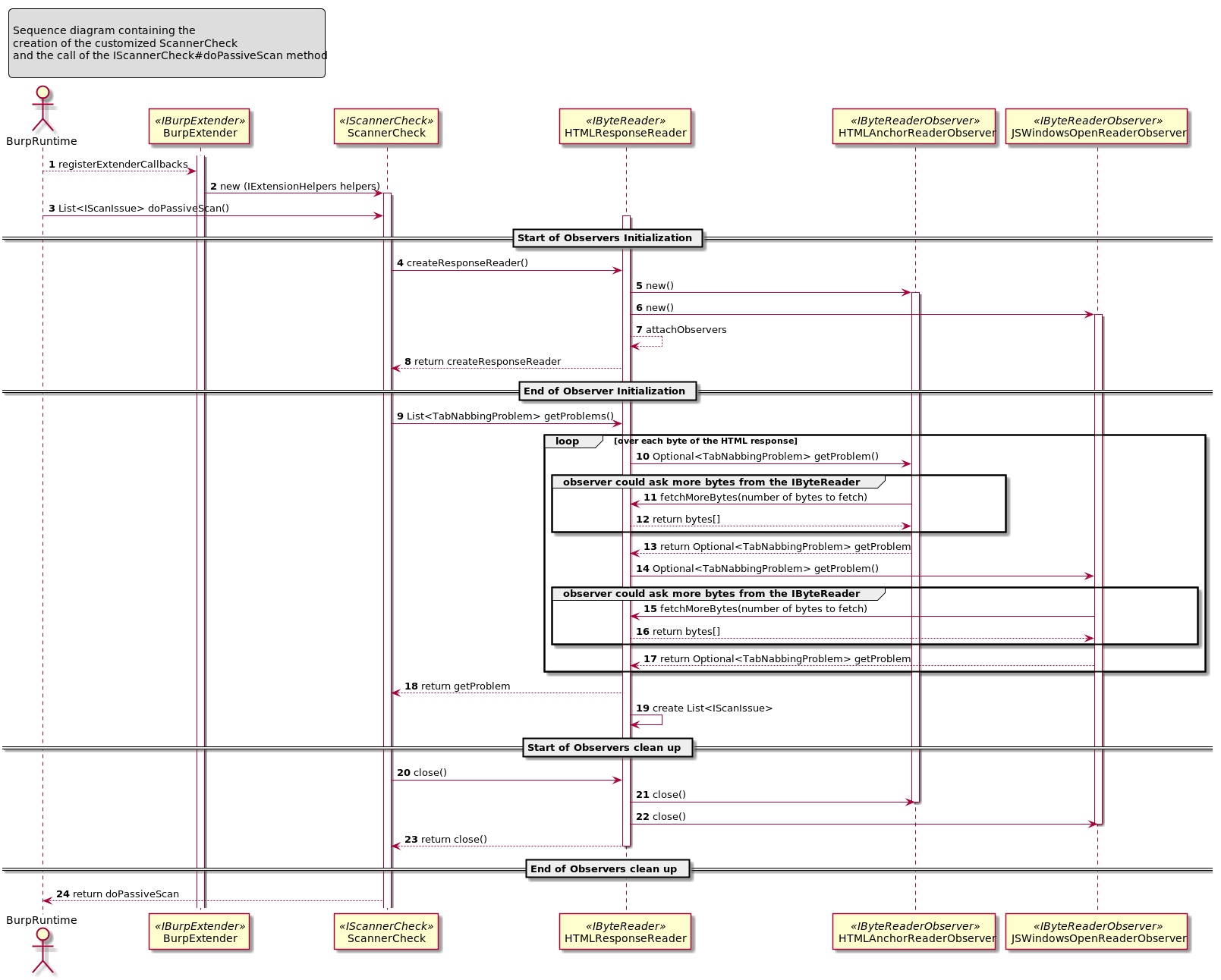
