Context

I am the maintainer of a Burp Suite extension that implements a REST API on top of Burp Suite. The REST API offers basic actions (retrieve a report, trigger a scan, retrieve the list of scanned URLs) and runs on a headless Burp Suite instance from a CI/CD pipeline.

From a technical point of view, the extension is implemented in Java. The REST API is built on the JAX-RS specification, with Jersey as the JAX-RS implementation.

Problem

One of the REST entry points returned a Set<OBJECT>, where OBJECT is a POJO specific to the extension. When a client called this entry point, the following exception was thrown:

    Caused by: java.lang.ClassNotFoundException: org.eclipse.persistence.internal.jaxb.many.CollectionValue
        at java.base/jdk.internal.loader.BuiltinClassLoader.loadClass(BuiltinClassLoader.java:641)
        at java.base/jdk.internal.loader.ClassLoaders$AppClassLoader.loadClass(ClassLoaders.java:188)
        at java.base/java.lang.ClassLoader.loadClass(ClassLoader.java:520)
        at org.eclipse.persistence.internal.jaxb.JaxbClassLoader.loadClass(JaxbClassLoader.java:110)

Root Cause

A ClassNotFoundException is thrown when the JVM tries to load a class that is not available on the classpath, or when there is a class loading issue. I was sure that the missing class (CollectionValue) was on the extension classpath, so the root cause had to be a class loading issue.

In Java, classes are loaded by a classloader. A classloader is a component of the Java Virtual Machine (JVM) responsible for loading Java classes into memory at runtime. Its primary role is to locate and load class files from various sources, such as the file system or the network.

Classloaders in Java typically follow a hierarchical delegation model. When a class is requested for loading, the classloader first delegates the request to its parent classloader. If the parent classloader cannot find the class, the child classloader attempts to load the class itself. This delegation continues recursively until the class is successfully loaded or all classloaders in the hierarchy have been exhausted.

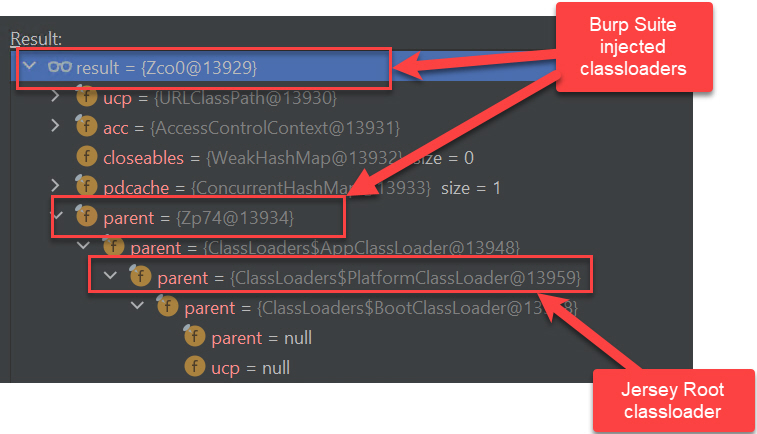
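The delegation chain can be inspected at runtime by walking the parents of a classloader. The following is a minimal sketch, not code from the extension: the class name ClassLoaderChain and the helper chain are hypothetical, and it assumes Java 9 or later because it relies on ClassLoader.getName().

```java
import java.util.ArrayList;
import java.util.List;

public class ClassLoaderChain {

    // Collects the names of a classloader and all of its parents,
    // from the given loader up to the bootstrap loader.
    public static List<String> chain(ClassLoader loader) {
        List<String> names = new ArrayList<>();
        for (ClassLoader cl = loader; cl != null; cl = cl.getParent()) {
            String name = cl.getName();
            // Unnamed loaders return null, so fall back to the class name.
            names.add(name != null ? name : cl.getClass().getName());
        }
        // A null parent stands for the bootstrap classloader.
        names.add("bootstrap");
        return names;
    }

    public static void main(String[] args) {
        ClassLoader start = Thread.currentThread().getContextClassLoader();
        chain(start).forEach(System.out::println);
    }
}
```

Calling chain(Thread.currentThread().getContextClassLoader()) from a JAX-RS resource and again from the extension code would be one way to compare the two hierarchies discussed here.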

The classloader hierarchy of a thread that is serving a JAX-RS call looks like this:

The classloader hierarchy of the thread that is executing the Burp Suite extension looks like this:

So, the root cause of the ClassNotFoundException is that the classloader hierarchy of the threads serving the JAX-RS calls does not include the Burp Suite extension classloader. As a result, none of the classes from the extension classpath can be loaded during a JAX-RS call.
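A diagnosis like this can be confirmed by asking two classloaders for the same class and comparing the answers. The sketch below is illustrative only: the class name VisibilityCheck and the helper canLoad are hypothetical, and in the real extension the second loader would be the one that loaded the extension classes.

```java
public class VisibilityCheck {

    // Returns true if the given loader (or one of its parents) can find the class.
    // The class is not initialized, only located.
    public static boolean canLoad(ClassLoader loader, String className) {
        try {
            Class.forName(className, false, loader);
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String className = args.length > 0
                ? args[0]
                : "org.eclipse.persistence.internal.jaxb.many.CollectionValue";

        // Loader used by frameworks that rely on the thread context classloader.
        ClassLoader contextLoader = Thread.currentThread().getContextClassLoader();
        // Loader that loaded this class (stands in for the extension classloader).
        ClassLoader ownLoader = VisibilityCheck.class.getClassLoader();

        System.out.println("context loader can load: " + canLoad(contextLoader, className));
        System.out.println("own loader can load:     " + canLoad(ownLoader, className));
    }
}
```

If the first line reports false and the second reports true when run inside a request thread, the class is present but invisible to the loader the framework is using.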

Solution

The solution is to create a custom classloader and inject it into the classloader hierarchy of the threads serving the JAX-RS calls. This custom classloader implements the delegation pattern and wraps both the original JAX-RS (Jersey) classloader and the Burp Suite extension classloader.

The custom classloader delegates all calls to the original Jersey classloader. The one exception is the loadClass method (the one throwing the ClassNotFoundException): if the Jersey classloader cannot find a class, the call is delegated to the Burp Suite extension classloader.

The custom classloader will look like this:

    import java.net.URL;

    public class CustomClassLoader extends ClassLoader {

        private final ClassLoader burpClassLoader;
        private final ClassLoader jerseyClassLoader;

        public CustomClassLoader(ClassLoader bcl, ClassLoader jcl) {
            this.burpClassLoader = bcl;
            this.jerseyClassLoader = jcl;
        }

        @Override
        public String getName() {
            return "CustomJerseyBurpClassloader";
        }

        // Try the Jersey classloader first, then fall back to the Burp classloader.
        @Override
        public Class<?> loadClass(String name) throws ClassNotFoundException {
            try {
                return this.jerseyClassLoader.loadClass(name);
            } catch (ClassNotFoundException ex) {
                // use the Burp classloader if the class cannot be loaded
                // from the Jersey classloader
                return this.burpClassLoader.loadClass(name);
            }
        }

        // all the other method implementations just delegate
        // to the jerseyClassLoader
        // for example:
        @Override
        public URL getResource(String name) {
            return this.jerseyClassLoader.getResource(name);
        }

        // .......
    }
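To see the fallback behaviour in isolation, without Burp Suite or Jersey on the classpath, the same pattern can be exercised with two stand-in loaders. This is a simplified sketch under stated assumptions: FallbackDemo, FallbackClassLoader and canLoad are hypothetical names, the "primary" loader is a bare loader that only sees JDK classes (standing in for the Jersey loader), and the "fallback" is whichever loader loaded the demo class (standing in for the extension loader).

```java
public class FallbackDemo {

    // Same idea as the custom classloader above, reduced to loadClass only.
    public static class FallbackClassLoader extends ClassLoader {
        private final ClassLoader primary;
        private final ClassLoader fallback;

        public FallbackClassLoader(ClassLoader primary, ClassLoader fallback) {
            this.primary = primary;
            this.fallback = fallback;
        }

        @Override
        public Class<?> loadClass(String name) throws ClassNotFoundException {
            try {
                return primary.loadClass(name);
            } catch (ClassNotFoundException ex) {
                // The primary loader does not know the class, ask the fallback.
                return fallback.loadClass(name);
            }
        }
    }

    public static boolean canLoad(ClassLoader loader, String className) {
        try {
            loader.loadClass(className);
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // A loader with a null parent delegates only to the bootstrap loader,
        // so it cannot see application classes such as FallbackDemo.
        ClassLoader bootstrapOnly = new ClassLoader((ClassLoader) null) {};
        ClassLoader own = FallbackDemo.class.getClassLoader();
        String name = FallbackDemo.class.getName();

        System.out.println("primary alone:  " + canLoad(bootstrapOnly, name));
        System.out.println("with fallback:  "
                + canLoad(new FallbackClassLoader(bootstrapOnly, own), name));
    }
}
```

The primary loader is always consulted first, so classes that both loaders can see keep resolving exactly as before; only lookups that would otherwise fail reach the fallback.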

Now that we have the custom classloader, what is missing is a way to replace the original Jersey classloader with the custom one for each REST call of the API. To do this, we create a ContainerRequestFilter (a JAX-RS interface supported by Jersey), which is called before each request is executed.

The request filter will look like this:

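    import java.io.IOException;
    // Assumes the javax.ws.rs namespace (Jersey 2.x);
    // Jersey 3.x uses jakarta.ws.rs instead.
    import javax.ws.rs.container.ContainerRequestContext;
    import javax.ws.rs.container.ContainerRequestFilter;

    public class ClassloaderSwitchFilter implements ContainerRequestFilter {

        @Override
        public void filter(ContainerRequestContext requestContext)
                throws IOException {
            Thread currentThread = Thread.currentThread();
            ClassLoader initialClassloader =
                    currentThread.getContextClassLoader();

            // custom classloader already injected
            if (initialClassloader instanceof CustomClassLoader) {
                return;
            }

            // first argument: the loader that loaded the extension classes,
            // second argument: the original (Jersey) context classloader
            ClassLoader customClassloader =
                    new CustomClassLoader(
                            CustomClassLoader.class.getClassLoader(),
                            initialClassloader);

            currentThread.setContextClassLoader(customClassloader);
        }
    }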
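The filter above leaves the custom classloader installed on the thread, which is why it checks whether the swap has already happened. Where a temporary swap is preferred, a common alternative is to set the context classloader around a single piece of work and restore it afterwards. The following is only a sketch of that pattern, not part of the extension: ContextClassLoaderSwap and callWith are hypothetical names.

```java
import java.util.function.Supplier;

public class ContextClassLoaderSwap {

    // Runs the action with the given context classloader installed on the
    // current thread, then restores the previous one even if the action throws.
    public static <T> T callWith(ClassLoader loader, Supplier<T> action) {
        Thread thread = Thread.currentThread();
        ClassLoader previous = thread.getContextClassLoader();
        thread.setContextClassLoader(loader);
        try {
            return action.get();
        } finally {
            thread.setContextClassLoader(previous);
        }
    }

    public static void main(String[] args) {
        ClassLoader temporary = new ClassLoader((ClassLoader) null) {};
        ClassLoader before = Thread.currentThread().getContextClassLoader();

        ClassLoader seenInside = callWith(temporary,
                () -> Thread.currentThread().getContextClassLoader());

        System.out.println("swapped inside action: " + (seenInside == temporary));
        System.out.println("restored afterwards:   "
                + (Thread.currentThread().getContextClassLoader() == before));
    }
}
```

Whether restoring is worthwhile depends on how the server reuses its worker threads; the permanent swap done by the filter is simpler and avoids creating a new classloader per request.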
